Ceph Luminous – 12

In the last few days, Ceph published Luminous 12.1.1 packages to its repositories: a release candidate for the upcoming LTS. Having had bad experiences with the previous RC, I gave it a fresh look, dropping ceph-deploy and writing my own Ansible roles instead.

Noticeable changes in Luminous include CephFS being, allegedly, stable. I haven’t tested it myself yet, although I’ve been hearing about that feature being unstable since my first days testing Ceph, years ago.

Another considerable change, now considered stable, is BlueStore: a replacement for Ceph FileStore (which relies on ext4, xfs or btrfs partitions). The main difference is that Ceph OSD (Object Storage Daemon) processes no longer mount a large filesystem to store their data. Beware that recovery scripts which reconstruct block devices by scanning OSD filesystems for PG content no longer work. It is still unclear how disaster recovery, that is recovering data from an offline cluster, would work. No surprises otherwise, so far so good.

Also advertised: the RBD-mirror daemon (introduced recently) is now considered stable when running in HA. From what I’ve seen, it is still unclear how to configure HA when starting several mirrors in a single cluster, and I very much doubt this would work out of the box. We’ll probably have to wait a little longer for the Ceph documentation to reflect the latest changes on that matter.

As I had 2 Ceph clusters, I could confirm that the RadosGW Multisite configuration works perfectly. That’s not a new feature, but it’s the first time I actually set it up. Buckets are eventually replicated to the remote cluster. The documentation is far more exhaustive and it works as advertised: I’ll stick to this until we learn more about RBD mirroring.

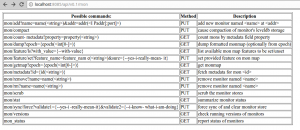
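Since multisite replication is only eventual, it can help to spot-check how far the remote cluster lags behind. The following is a minimal sketch, not something taken from the Ceph docs: it assumes you have already listed the same bucket on both clusters with an S3 client of your choice and reduced each listing to a `{key: etag}` dictionary. The function name `replication_lag` and the sample keys are made up for illustration, and it assumes replicated objects keep the same ETag on both sides.

```python
def replication_lag(master_objects, secondary_objects):
    """Compare two {object key: ETag} listings of the same bucket.

    Returns the keys not yet present on the secondary zone ("missing")
    and the keys present on both sides with a different ETag ("stale").
    """
    missing = sorted(k for k in master_objects if k not in secondary_objects)
    stale = sorted(
        k
        for k, etag in master_objects.items()
        if k in secondary_objects and secondary_objects[k] != etag
    )
    return {"missing": missing, "stale": stale}


# Hypothetical listings, as they might look shortly after an upload.
master = {"backup.tar": "aaa111", "logo.png": "bbb222", "notes.txt": "ccc333"}
secondary = {"backup.tar": "aaa111", "logo.png": "old000"}

lag = replication_lag(master, secondary)
print(lag)
```

Running the comparison a few times in a row should show both lists shrinking to empty once the sync catches up.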

Freshly introduced: the Ceph RestAPI Gateway. Again, some docs are still missing. On paper, this service should let you query your cluster via a RESTful API, as you would with the Ceph CLI tools. Having set one up, it isn’t very complicated. I would recommend not using the built-in webserver, and using nginx and uwsgi instead. The basics on that matter can be found on GitHub.

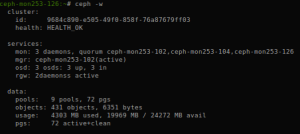

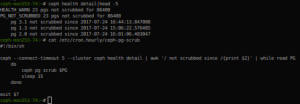
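To give an idea of what querying such a gateway could look like from a script, here is a minimal sketch using only the Python standard library. The host name, the `/api/v0.1` prefix and the `health` path are assumptions for illustration; check them against whatever your own nginx/uwsgi setup actually exposes.

```python
import json
from urllib.parse import urljoin
from urllib.request import Request, urlopen

# Hypothetical base URL of the gateway behind nginx; adjust to your setup.
BASE_URL = "http://ceph-api.example.com/api/v0.1"


def endpoint_url(base_url, path):
    """Join a base URL and a relative path, tolerating stray slashes."""
    return urljoin(base_url.rstrip("/") + "/", path.lstrip("/"))


def get_json(base_url, path, timeout=10):
    """GET an endpoint and decode the JSON body of the response."""
    request = Request(
        endpoint_url(base_url, path),
        headers={"Accept": "application/json"},
    )
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (needs a reachable gateway; "health" is an assumed path):
# print(get_json(BASE_URL, "health"))
```

Keeping the URL building separate from the HTTP call makes it easy to point the same helper at a different prefix if your gateway is mounted elsewhere.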

Even though Ceph Luminous shouldn’t reach LTS before the 12.2.0 release, as of today I can confirm the Debian Stretch packages work relatively well on a 3-MON, 3-MGR, 3-OSD, 2-RGW setup with an haproxy balancer in front, serving S3-like buckets. Note there is some weirdness regarding PG scrubbing, though: you may need to add a cron job … And if you consider running Ceph on commodity hardware, be aware that the latest releases may be broken there.

edit: LTS released as of late August. SSE4.2 support is still mandatory to deploy your Luminous cluster, although a fix recently reached the master branch, ….

As of late September, the Ceph 12.2.1 release can actually be installed on older, commodity hardware.
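If you are stuck on a release older than 12.2.1, you can check beforehand whether a host advertises SSE4.2. The sketch below assumes an x86 Linux host, where `/proc/cpuinfo` lists CPU features on `flags` lines and SSE4.2 shows up as `sse4_2`. The function names are mine, not part of any Ceph tooling.

```python
def has_sse42(cpuinfo_text):
    """Return True if any 'flags' line of cpuinfo-style text lists sse4_2."""
    for line in cpuinfo_text.splitlines():
        name, _, value = line.partition(":")
        # Match the flag as a whole word, so "sse4_1" alone does not count.
        if name.strip() == "flags" and "sse4_2" in value.split():
            return True
    return False


def host_has_sse42(path="/proc/cpuinfo"):
    """Read the local cpuinfo file (x86 Linux assumed) and check for SSE4.2."""
    with open(path) as handle:
        return has_sse42(handle.read())


# Example on the local machine (Linux only):
# print(host_has_sse42())
```

Run it on every node you intend to use as MON or OSD before installing packages, since a single older machine is enough to cause trouble.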

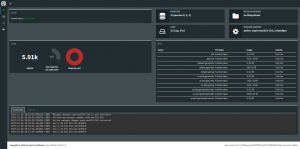

Meanwhile, a few screenshots of the Ceph Dashboard were posted to the Ceph website, advertising that new feature.