Scaling out with Ceph

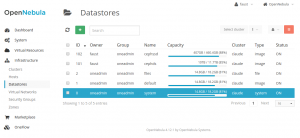

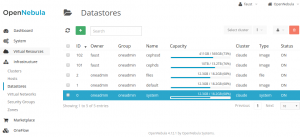

A few months ago, I installed a Ceph cluster hosting disk images, for my OpenNebula cloud.

This cluster is based on 5 ProLian N54L, each with a 60G SSD for the main filesystems, some with 1 512G SSD OSD, all with 3 disk drives from 1 to 4T. SSD are grouped in a pool, HDD in an other.

Now that most my services are in this cluster, I’m left with very few free space.

The good news is there is no significant impact on performances, as I was experiencing with ZFS.

The bad news, is that I urgently need to add some storage space.

Last Sunday, I ordered my sixth N54L on eBay (from my “official” refurbish-er, BargainHardware) and a few disks.

After receiving everything, I installed the latest Ubuntu LTS (Trusty) from my PXE, installed puppet, prepared everything, … In about an hour, I was ready to add my disks.

I use a custom crush map, and the osd “crush update on start” set to false, in my ceph.conf.

This was the first time I tested this, and I was pleased to see I can run ceph-deploy to prepare my OSD, without automatically adding it to the default CRUSH root – especially having two pools.

From my ceph-deploy host (some Xen PV I use hosting ceph-dash, munin and nagios probes related to ceph, but with no OSD nor MON actually running), I ran the following:

# ceph-deploy install erebe

# ceph-deploy disk list erebe

# ceph-deploy disk zap erebe:sda

# ceph-deploy disk zap erebe:sdb

# ceph-deploy disk zap erebe:sdc

# ceph-deploy disk zap erebe:sdd

# ceph-deploy osd prepare erebe:sda

# ceph-deploy osd prepare erebe:sda

# ceph-deploy osd prepare erebe:sdb

# ceph-deploy osd prepare erebe:sdc

# ceph-deploy osd prepare erebe:sdd

At that point, the 4 new OSD were up and running according to ceph status, though no data was assigned to them.

Next step was to update my crushmap, including these new OSDs in the proper root.

# ceph osd getcrushmap -o compiled_crush

# crushtool -d compiled_crush -o plain_crush

# vi plain_crush

# crushtool -c plain_crush -o new-crush

# ceph osd setcrushmap -i new-crush

For the record, the content of my current crush map is the following:

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable straw_calc_version 1

# devices

device 0 osd.0

device 1 osd.1

device 2 osd.2

device 3 osd.3

device 4 osd.4

device 5 osd.5

device 6 osd.6

device 7 osd.7

device 8 osd.8

device 9 osd.9

device 10 osd.10

device 11 osd.11

device 12 osd.12

device 13 osd.13

device 14 osd.14

device 15 osd.15

device 16 osd.16

device 17 osd.17

device 18 osd.18

device 19 osd.19

device 20 osd.20

device 21 osd.21

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 region

type 10 root

# buckets

host nyx-hdd {

id -2 # do not change unnecessarily

# weight 10.890

alg straw

hash 0 # rjenkins1

item osd.1 weight 3.630

item osd.2 weight 3.630

item osd.3 weight 3.630

}

host eos-hdd {

id -3 # do not change unnecessarily

# weight 11.430

alg straw

hash 0 # rjenkins1

item osd.5 weight 3.630

item osd.6 weight 3.900

item osd.7 weight 3.900

}

host hemara-hdd {

id -4 # do not change unnecessarily

# weight 9.980

alg straw

hash 0 # rjenkins1

item osd.9 weight 3.630

item osd.10 weight 3.630

item osd.11 weight 2.720

}

host selene-hdd {

id -5 # do not change unnecessarily

# weight 5.430

alg straw

hash 0 # rjenkins1

item osd.12 weight 1.810

item osd.13 weight 2.720

item osd.14 weight 0.900

}

host helios-hdd {

id -6 # do not change unnecessarily

# weight 3.050

alg straw

hash 0 # rjenkins1

item osd.15 weight 1.600

item osd.16 weight 0.700

item osd.17 weight 0.750

}

host erebe-hdd {

id -7 # do not change unnecessarily

# weight 7.250

alg straw

hash 0 # rjenkins1

item osd.19 weight 2.720

item osd.20 weight 1.810

item osd.21 weight 2.720

}

root hdd {

id -1 # do not change unnecessarily

# weight 40.780

alg straw

hash 0 # rjenkins1

item nyx-hdd weight 10.890

item eos-hdd weight 11.430

item hemara-hdd weight 9.980

item selene-hdd weight 5.430

item helios-hdd weight 3.050

item erebe-hdd weight 7.250

}

host nyx-ssd {

id -42 # do not change unnecessarily

# weight 0.460

alg straw

hash 0 # rjenkins1

item osd.0 weight 0.460

}

host eos-ssd {

id -43 # do not change unnecessarily

# weight 0.460

alg straw

hash 0 # rjenkins1

item osd.4 weight 0.460

}

host hemara-ssd {

id -44 # do not change unnecessarily

# weight 0.450

alg straw

hash 0 # rjenkins1

item osd.8 weight 0.450

}

host erebe-ssd {

id -45 # do not change unnecessarily

# weight 0.450

alg straw

hash 0 # rjenkins1

item osd.18 weight 0.450

}

root ssd {

id -41 # do not change unnecessarily

# weight 3.000

alg straw

hash 0 # rjenkins1

item nyx-ssd weight 1.000

item eos-ssd weight 1.000

item hemara-ssd weight 1.000

item erebe-ssd weight 1.000

}

# rules

rule hdd {

ruleset 0

type replicated

min_size 1

max_size 10

step take hdd

step chooseleaf firstn 0 type host

step emit

}

rule ssd {

ruleset 1

type replicated

min_size 1

max_size 10

step take ssd

step chooseleaf firstn 0 type host

step emit

}

# end crush map

Applying the new crush map, a 20 hours process started, moving placement groups.

# ceph-diskspace

/dev/sdc1 3.7T 2.0T 1.7T 55% /var/lib/ceph/osd/ceph-6

/dev/sda1 472G 330G 143G 70% /var/lib/ceph/osd/ceph-4

/dev/sdb1 3.7T 2.4T 1.4T 64% /var/lib/ceph/osd/ceph-5

/dev/sdd1 3.7T 2.4T 1.4T 65% /var/lib/ceph/osd/ceph-7

/dev/sda1 442G 329G 114G 75% /var/lib/ceph/osd/ceph-18

/dev/sdb1 2.8T 2.1T 668G 77% /var/lib/ceph/osd/ceph-19

/dev/sdc1 1.9T 1.3T 593G 69% /var/lib/ceph/osd/ceph-20

/dev/sdd1 2.8T 2.0T 808G 72% /var/lib/ceph/osd/ceph-21

/dev/sdc1 927G 562G 365G 61% /var/lib/ceph/osd/ceph-17

/dev/sdb1 927G 564G 363G 61% /var/lib/ceph/osd/ceph-16

/dev/sda1 1.9T 1.2T 630G 67% /var/lib/ceph/osd/ceph-15

/dev/sdb1 3.7T 2.8T 935G 75% /var/lib/ceph/osd/ceph-9

/dev/sdd1 2.8T 1.4T 1.4T 50% /var/lib/ceph/osd/ceph-11

/dev/sda1 461G 274G 187G 60% /var/lib/ceph/osd/ceph-8

/dev/sdc1 3.7T 2.2T 1.5T 60% /var/lib/ceph/osd/ceph-10

/dev/sdc1 3.7T 1.9T 1.8T 52% /var/lib/ceph/osd/ceph-1

/dev/sde1 3.7T 2.0T 1.7T 54% /var/lib/ceph/osd/ceph-3

/dev/sdd1 3.7T 2.3T 1.5T 62% /var/lib/ceph/osd/ceph-2

/dev/sdb1 472G 308G 165G 66% /var/lib/ceph/osd/ceph-0

/dev/sdb1 1.9T 1.2T 673G 64% /var/lib/ceph/osd/ceph-12

/dev/sdd1 927G 580G 347G 63% /var/lib/ceph/osd/ceph-14

/dev/sdc1 2.8T 2.0T 813G 71% /var/lib/ceph/osd/ceph-13

I’m really satisfied by the way ceph is constantly improving their product.

Having discussed with several interviewers in the last few weeks, I’m still having to explain why ceph rbd is not to be confused with cephfs, and if the latter may not be production ready, rados storage is just the thing you could be looking for distributing your storage.