Deploying Kubernetes with KubeSpray

I should first admit that OpenShift 4 is slowly recovering from its architectural do-over. I’m still missing something that would be production-ready, and I’m quite disappointed by the waste of resources, violent upgrades, broken CSI, a somewhat unstable RH-CoreOS, and a complicated deployment scheme on bare metal, … among other, less critical bugs.

OpenShift 3 is still an interesting platform for hosting production workloads, although being based on Kubernetes 1.11 makes it rather dated already.

After some experimentation in a Raspberry Pi lab, I figured I would give Kubernetes a try on x86. To do so, I looked at KubeSpray.

If you’re familiar with OpenShift 3 cluster deployments, you may have been using openshift-ansible already. Kube-spray is a similar solution, focused on Kubernetes, simplifying the process of bootstrapping, scaling and upgrading highly available clusters.

Currently, kube-spray can deploy Kubernetes with container runtimes such as docker, cri-o or containerd, an SDN based on flannel, weave, calico, … as well as a registry, an nginx-based ingress controller, the cert-manager controller, integrated metrics, or the local volumes, RBD and CephFS provisioner plugins.

Compared with OpenShift 4, the main missing components would be the cluster and developer consoles, and RBAC integrating with users and groups from a third-party authentication provider. Arguably the OLM as well, though I never really liked that one: it makes deploying operators quite abstract and complicated to troubleshoot, as it involves several namespaces and containers, … Then there is the Prometheus Operator, though that one could still be deployed manually.

I can confirm everything works perfectly when deploying on Debian Buster nodes with containerd and calico, keeping pretty much all defaults in place and activating all addons.

The sample variables shipping with kube-spray are pretty much on point. We would create an inventory file, such as the following:

```yaml
all:
  hosts:
    master1:
      access_ip: 10.42.253.10
      ansible_host: 10.42.253.10
      ip: 10.42.253.10
      node_labels:
        infra.utgb/zone: momos-adm
    master2:
      access_ip: 10.42.253.11
      ansible_host: 10.42.253.11
      ip: 10.42.253.11
      node_labels:
        infra.utgb/zone: thanatos-adm
    master3:
      access_ip: 10.42.253.12
      ansible_host: 10.42.253.12
      ip: 10.42.253.12
      node_labels:
        infra.utgb/zone: moros-adm
    infra1:
      access_ip: 10.42.253.13
      ansible_host: 10.42.253.13
      ip: 10.42.253.13
      node_labels:
        node-role.kubernetes.io/infra: "true"
        infra.utgb/zone: momos-adm
    infra2:
      access_ip: 10.42.253.14
      ansible_host: 10.42.253.14
      ip: 10.42.253.14
      node_labels:
        node-role.kubernetes.io/infra: "true"
        infra.utgb/zone: thanatos-adm
    infra3:
      access_ip: 10.42.253.15
      ansible_host: 10.42.253.15
      ip: 10.42.253.15
      node_labels:
        node-role.kubernetes.io/infra: "true"
        infra.utgb/zone: moros-adm
    compute1:
      access_ip: 10.42.253.20
      ansible_host: 10.42.253.20
      ip: 10.42.253.20
      node_labels:
        node-role.kubernetes.io/worker: "true"
        infra.utgb/zone: momos-adm
    compute2:
      access_ip: 10.42.253.21
      ansible_host: 10.42.253.21
      ip: 10.42.253.21
      node_labels:
        node-role.kubernetes.io/worker: "true"
        infra.utgb/zone: moros-adm
    compute3:
      access_ip: 10.42.253.22
      ansible_host: 10.42.253.22
      ip: 10.42.253.22
      node_labels:
        node-role.kubernetes.io/worker: "true"
        infra.utgb/zone: momos-adm
    compute4:
      access_ip: 10.42.253.23
      ansible_host: 10.42.253.23
      ip: 10.42.253.23
      node_labels:
        node-role.kubernetes.io/worker: "true"
        infra.utgb/zone: moros-adm
  children:
    kube-master:
      hosts:
        master1:
        master2:
        master3:
    kube-infra:
      hosts:
        infra1:
        infra2:
        infra3:
    kube-worker:
      hosts:
        compute1:
        compute2:
        compute3:
        compute4:
    kube-node:
      children:
        kube-master:
        kube-infra:
        kube-worker:
    etcd:
      hosts:
        master1:
        master2:
        master3:
    k8s-cluster:
      children:
        kube-master:
        kube-node:
    calico-rr:
      hosts: {}
```
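The etcd group above lists the three masters. etcd only accepts writes while a strict majority of its members is up, which is why member counts are usually odd. The arithmetic is easy to sketch in shell; the function names are illustrative, not part of kube-spray:

```bash
#!/bin/sh
# Quorum for an etcd cluster of $1 members: a strict majority.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Members that may fail while the remaining ones still form a quorum.
etcd_tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

# Three members (master1..3) keep quorum with 2 up, i.e. survive 1 failure;
# a fourth member would raise the quorum to 3 without tolerating more failures.
echo "quorum: $(etcd_quorum 3), tolerated failures: $(etcd_tolerated_failures 3)"
```

To check how Ansible resolves the nested groups of such an inventory, `ansible-inventory -i path/to/inventory --graph` prints the group tree.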

Then, we’ll edit the sample group_vars/etcd.yml:

```yaml
etcd_compaction_retention: "8"
etcd_metrics: basic
etcd_memory_limit: 5GB
etcd_quota_backend_bytes: 2147483648
# ^ WARNING: the sample file suggests "2G",
# which results in etcd not starting (deployment_type=host);
# journalctl shows errors such as:
# > invalid value "2G" for ETCD_QUOTA_BACKEND_BYTES: strconv.ParseInt: parsing "2G": invalid syntax
# Also note: here, I'm setting 20G, not 2.
etcd_deployment_type: host
```
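Since ETCD_QUOTA_BACKEND_BYTES has to be a plain integer, it helps to convert human-readable sizes beforehand. A minimal POSIX shell sketch, assuming binary (1024-based) multipliers; `to_bytes` is a hypothetical helper, not a kube-spray variable or filter. For reference, 2147483648 bytes is 2 GiB, while 20 GiB would be 21474836480.

```bash
#!/bin/sh
# Convert a size such as "2G", "512M" or "4096" into a byte count,
# using binary multipliers (K = 1024, M = 1024^2, ...).
to_bytes() {
    size=$1
    case "$size" in
        *[Kk]) mult=1024 ;;
        *[Mm]) mult=$((1024 * 1024)) ;;
        *[Gg]) mult=$((1024 * 1024 * 1024)) ;;
        *[Tt]) mult=$((1024 * 1024 * 1024 * 1024)) ;;
        *) mult=1 ;;
    esac
    # Strip a single trailing unit letter, if any.
    num=${size%[KkMmGgTt]}
    echo $(( num * mult ))
}

echo "etcd_quota_backend_bytes: $(to_bytes 2G)"
```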

Next, common variables in group_vars/all/all.yml:

```yaml
etcd_data_dir: /var/lib/etcd
bin_dir: /usr/local/bin
kubelet_load_modules: true
upstream_dns_servers:
  - 10.255.255.255
searchdomains:
  - intra.unetresgrossebite.com
  - unetresgrossebite.com
additional_no_proxy: "*.intra.unetresgrossebite.com,10.42.0.0/15"
http_proxy: "http://netserv.vms.intra.unetresgrossebite.com:3128/"
https_proxy: "{{ http_proxy }}"
download_validate_certs: False
cert_management: script
download_container: true
deploy_container_engine: true
apiserver_loadbalancer_domain_name: api-k8s.intra.unetresgrossebite.com
loadbalancer_apiserver:
  address: 10.42.253.152
  port: 6443
loadbalancer_apiserver_localhost: false
loadbalancer_apiserver_port: 6443
```
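The additional_no_proxy entry lists 10.42.0.0/15, which spans 10.42.0.0 through 10.43.255.255: every node address in the inventory, as well as the API VIP 10.42.253.152, falls inside it. A small POSIX shell sketch to check such membership; the helper names are illustrative:

```bash
#!/bin/sh
# Convert a dotted-quad IPv4 address into a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return success when address $1 belongs to the CIDR range $2.
in_cidr() {
    net=${2%/*}
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for addr in 10.42.253.10 10.42.253.152 10.233.0.1; do
    if in_cidr "$addr" 10.42.0.0/15; then
        echo "$addr: covered by no_proxy"
    else
        echo "$addr: not covered"
    fi
done
```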

We would also want to customize the variables in group_vars/k8s-cluster/k8s-cluster.yml:

```yaml
kube_config_dir: /etc/kubernetes
kube_script_dir: "{{ bin_dir }}/kubernetes-scripts"
kube_manifest_dir: "{{ kube_config_dir }}/manifests"
kube_cert_dir: "{{ kube_config_dir }}/ssl"
kube_token_dir: "{{ kube_config_dir }}/tokens"
kube_users_dir: "{{ kube_config_dir }}/users"
kube_api_anonymous_auth: true
kube_version: v1.18.3
kube_image_repo: "k8s.gcr.io"
local_release_dir: "/tmp/releases"
retry_stagger: 5
kube_cert_group: kube-cert
kube_log_level: 2
credentials_dir: "{{ inventory_dir }}/credentials"
kube_api_pwd: "{{ lookup('password', credentials_dir + '/kube_user.creds length=15 chars=ascii_letters,digits') }}"
kube_users:
  kube:
    pass: "{{ kube_api_pwd }}"
    role: admin
    groups:
      - system:masters
kube_oidc_auth: false
kube_basic_auth: true
kube_token_auth: true
kube_network_plugin: calico
kube_network_plugin_multus: false
kube_service_addresses: 10.233.0.0/18
kube_pods_subnet: 10.233.64.0/18
kube_network_node_prefix: 24
kube_apiserver_ip: "{{ kube_service_addresses|ipaddr('net')|ipaddr(1)|ipaddr('address') }}"
kube_apiserver_port: 6443
kube_apiserver_insecure_port: 0
kube_proxy_mode: ipvs
# set to true when using MetalLB
kube_proxy_strict_arp: false
kube_proxy_nodeport_addresses: []
kube_encrypt_secret_data: false
cluster_name: cluster.local
ndots: 2
kubeconfig_localhost: true
kubectl_localhost: true
dns_mode: coredns
enable_nodelocaldns: true
nodelocaldns_ip: 169.254.25.10
nodelocaldns_health_port: 9254
enable_coredns_k8s_external: false
coredns_k8s_external_zone: k8s_external.local
enable_coredns_k8s_endpoint_pod_names: false
system_reserved: true
system_memory_reserved: 512M
system_cpu_reserved: 500m
system_master_memory_reserved: 256M
system_master_cpu_reserved: 250m
deploy_netchecker: false
skydns_server: "{{ kube_service_addresses|ipaddr('net')|ipaddr(3)|ipaddr('address') }}"
skydns_server_secondary: "{{ kube_service_addresses|ipaddr('net')|ipaddr(4)|ipaddr('address') }}"
dns_domain: "{{ cluster_name }}"
kubelet_deployment_type: host
helm_deployment_type: host
kubeadm_control_plane: false
kubeadm_certificate_key: "{{ lookup('password', credentials_dir + '/kubeadm_certificate_key.creds length=64 chars=hexdigits') | lower }}"
k8s_image_pull_policy: IfNotPresent
kubernetes_audit: false
dynamic_kubelet_configuration: false
default_kubelet_config_dir: "{{ kube_config_dir }}/dynamic_kubelet_dir"
dynamic_kubelet_configuration_dir: "{{ kubelet_config_dir | default(default_kubelet_config_dir) }}"
authorization_modes:
  - Node
  - RBAC
podsecuritypolicy_enabled: true
container_manager: containerd
resolvconf_mode: none
etcd_deployment_type: host
```
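The ipaddr filter chains above derive fixed addresses from kube_service_addresses: with 10.233.0.0/18, kube_apiserver_ip should come out as 10.233.0.1, and the two skydns_server entries as 10.233.0.3 and 10.233.0.4. Likewise, splitting the 10.233.64.0/18 pods range into one /24 per node leaves room for 64 nodes, each block holding 256 addresses (the kubelet's default limit of 110 Pods per node still applies). A POSIX shell sketch reproducing that arithmetic; the helpers are illustrative stand-ins, not the ipaddr filter itself:

```bash
#!/bin/sh
# Convert a dotted-quad IPv4 address into a 32-bit integer, and back.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# N-th address of CIDR $1, counting from its network address ($2 = N).
nth_addr() {
    net=${1%/*}
    bits=${1#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    int_to_ip $(( ($(ip_to_int "$net") & mask) + $2 ))
}

# Number of per-node blocks of prefix length $2 fitting in CIDR $1.
node_blocks() {
    echo $(( 1 << ($2 - ${1#*/}) ))
}

echo "kube_apiserver_ip:       $(nth_addr 10.233.0.0/18 1)"
echo "skydns_server:           $(nth_addr 10.233.0.0/18 3)"
echo "skydns_server_secondary: $(nth_addr 10.233.0.0/18 4)"
echo "node blocks:             $(node_blocks 10.233.64.0/18 24)"
echo "addresses per block:     $(( 1 << (32 - 24) ))"
```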

Finally, we may enable additional components in group_vars/k8s-cluster/addons.yml:

```yaml
dashboard_enabled: true
helm_enabled: false

registry_enabled: false
registry_namespace: kube-system
registry_storage_class: rwx-storage
registry_disk_size: 500Gi

metrics_server_enabled: true
metrics_server_kubelet_insecure_tls: true
metrics_server_metric_resolution: 60s
metrics_server_kubelet_preferred_address_types: InternalIP

cephfs_provisioner_enabled: true
cephfs_provisioner_namespace: cephfs-provisioner
cephfs_provisioner_cluster: ceph
cephfs_provisioner_monitors: "10.42.253.110:6789,10.42.253.111:6789,10.42.253.112:6789"
cephfs_provisioner_admin_id: admin
cephfs_provisioner_secret: key returned by 'ceph auth get client.admin'
cephfs_provisioner_storage_class: rwx-storage
cephfs_provisioner_reclaim_policy: Delete
cephfs_provisioner_claim_root: /volumes
cephfs_provisioner_deterministic_names: true

rbd_provisioner_enabled: true
rbd_provisioner_namespace: rbd-provisioner
rbd_provisioner_replicas: 2
rbd_provisioner_monitors: "10.42.253.110:6789,10.42.253.111:6789,10.42.253.112:6789"
rbd_provisioner_pool: kube
rbd_provisioner_admin_id: admin
rbd_provisioner_secret_name: ceph-secret-admin
rbd_provisioner_secret: key returned by 'ceph auth get client.admin'
rbd_provisioner_user_id: kube
rbd_provisioner_user_secret_name: ceph-secret-user
rbd_provisioner_user_secret: key returned by 'ceph auth get client.kube'
rbd_provisioner_user_secret_namespace: "{{ rbd_provisioner_namespace }}"
rbd_provisioner_fs_type: ext4
rbd_provisioner_image_format: "2"
rbd_provisioner_image_features: layering
rbd_provisioner_storage_class: rwo-storage
rbd_provisioner_reclaim_policy: Delete

ingress_nginx_enabled: true
ingress_nginx_host_network: true
ingress_publish_status_address: ""
ingress_nginx_nodeselector:
  node-role.kubernetes.io/infra: "true"
ingress_nginx_namespace: ingress-nginx
ingress_nginx_insecure_port: 80
ingress_nginx_secure_port: 443
ingress_nginx_configmap:
  map-hash-bucket-size: "512"

cert_manager_enabled: true
cert_manager_namespace: cert-manager
```
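The provisioner secrets above are the base64 keys of the corresponding Ceph users. `ceph auth get client.admin` prints a keyring-formatted section, from which only the `key = …` value is needed (`ceph auth get-key client.admin` prints that key alone). A sketch extracting it from keyring text read on stdin; `keyring_key` is a hypothetical helper, and the key in the example is a dummy value:

```bash
#!/bin/sh
# Print the value of the first "key = ..." line of keyring-formatted text
# read on stdin, such as the output of "ceph auth get client.admin".
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }'
}

# Dummy keyring text; on a Ceph admin node one would rather pipe
# "ceph auth get client.admin" (or client.kube) into keyring_key.
printf '[client.admin]\n\tkey = AQDUMMYKEYexample==\n\tcaps mon = "allow *"\n' | keyring_key
```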

We now have pretty much everything ready. Last, we would deploy an HAProxy node proxying requests to the Kubernetes API. To do so, I use a pair of VMs running keepalived and haproxy, sharing the 10.42.253.152 virtual IP. On both, install the necessary packages and configuration:

```bash
apt-get update ; apt-get install keepalived haproxy hatop

cat <<EOF >/etc/keepalived/keepalived.conf
global_defs {
    notification_email {
        contact@example.com
    }
    notification_email_from keepalive@$(hostname -f)
    smtp_server smtp.example.com
    smtp_connect_timeout 30
}

vrrp_instance VI_1 {
    state MASTER
    interface ens3
    virtual_router_id 101
    priority 10
    # seconds between VRRP advertisements
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass your_secret
    }
    virtual_ipaddress {
        10.42.253.152
    }
}
EOF

echo net.ipv4.conf.all.forwarding=1 >>/etc/sysctl.conf
sysctl -w net.ipv4.conf.all.forwarding=1
systemctl restart keepalived && systemctl enable keepalived
# hint: use distinct priorities on nodes
```

```bash
cat <<EOF >/etc/haproxy/haproxy.cfg
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3

defaults
    log global
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen kubernetes-apiserver-https
    bind 0.0.0.0:6443
    mode tcp
    option log-health-checks
    server master1 10.42.253.10:6443 check check-ssl verify none inter 10s
    server master2 10.42.253.11:6443 check check-ssl verify none inter 10s
    server master3 10.42.253.12:6443 check check-ssl verify none inter 10s
    balance roundrobin
EOF

systemctl restart haproxy && systemctl enable haproxy

cat <<EOF >/etc/profile.d/hatop.sh
alias hatop='hatop -s /run/haproxy/admin.sock'
EOF
```
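As the hint says, the two keepalived nodes should use distinct priorities: among live nodes, the one with the highest priority holds the VIP. One way to keep both configurations in sync is to render the vrrp_instance section from a function taking the state and priority as arguments. A sketch reusing the values above, with advert_int set to 1 second; `render_vrrp_instance` is hypothetical, and the 110/100 priorities are arbitrary examples:

```bash
#!/bin/sh
# Print a vrrp_instance section for one load balancer node.
#   $1: initial state (MASTER or BACKUP)
#   $2: priority (the highest priority among live nodes holds the VIP)
render_vrrp_instance() {
    cat <<EOF
vrrp_instance VI_1 {
    state $1
    interface ens3
    virtual_router_id 101
    priority $2
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass your_secret
    }
    virtual_ipaddress {
        10.42.253.152
    }
}
EOF
}

# First node:  render_vrrp_instance MASTER 110
# Second node: render_vrrp_instance BACKUP 100
render_vrrp_instance BACKUP 100
```

Once the files are in place, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the HAProxy side before restarting the service.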

We may now deploy our cluster:

```bash
ansible-playbook -i path/to/inventory --become --become-user=root cluster.yml
```

For a 10-node cluster, it shouldn’t take more than an hour.
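Once the playbook completes, a quick sanity check is to list nodes that are not Ready. With kubeconfig_localhost: true, kube-spray should leave an admin.conf under the inventory's artifacts/ directory, usable through KUBECONFIG. The helper below is a hypothetical sketch filtering `kubectl get nodes --no-headers` output; note that it also reports cordoned nodes, whose status reads Ready,SchedulingDisabled.

```bash
#!/bin/sh
# Read "kubectl get nodes --no-headers" output on stdin and print the name
# of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Typical use (paths are placeholders):
#   KUBECONFIG=path/to/inventory/artifacts/admin.conf \
#     kubectl get nodes --no-headers | not_ready_nodes
printf '%s\n' \
    'master1    Ready      master   12m   v1.18.3' \
    'compute4   NotReady   worker   10m   v1.18.3' | not_ready_nodes
```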

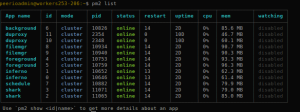

It is quite nice to see you can have a reliable Kubernetes deployment with fewer than 60 infra Pods.
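Checking that kind of figure on one's own cluster comes down to counting Pods per namespace. A sketch reading `kubectl get pods --all-namespaces --no-headers` output on stdin, where the first column is the namespace; `pods_per_namespace` is a hypothetical helper:

```bash
#!/bin/sh
# Count Pods per namespace from "kubectl get pods --all-namespaces --no-headers"
# output on stdin (first column: namespace), sorted by namespace name.
pods_per_namespace() {
    awk '{ count[$1]++ } END { for (ns in count) print ns, count[ns] }' | sort
}

# Typical use: kubectl get pods --all-namespaces --no-headers | pods_per_namespace
printf '%s\n' \
    'kube-system     coredns-abc            1/1   Running   0   1h' \
    'kube-system     calico-node-xyz        1/1   Running   0   1h' \
    'ingress-nginx   ingress-nginx-ctl-1    1/1   Running   0   1h' | pods_per_namespace
```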

I’m also noticing that while the CSI provisioner is used to create Ceph RBD and CephFS volumes, the host is still in charge of mounting those volumes, which is, in a way, a workaround for the CSI attacher plugins.

On that note, I’ve heard that the issue of volumes getting blocked during node failures was on its way to being solved, through a fix to the CSI spec.

Sooner or later, we should be able to use the full CSI stack.

All in all, kube-spray is quite a satisfying solution.

Having struggled quite a lot with openshift-ansible, and not yet satisfied with their latest installer, I find that kube-spray definitely feels like a reliable piece of software: the code is well organized, it goes straight to the point, …

Besides, I need a break from CentOS. I’m amazed I did not try it earlier.